I have posted at SSRN a short paper on Martti Koskenniemi and human rights, written for an edited volume on his work. Koskenniemi is a distinguished Finnish international law scholar who has argued in several books that international law is indeterminate, and so tends to get harnessed to various political agendas even while its practitioners claim that international law is “neutral.” Koskenniemi focuses on the rhetoric of international legal scholarship; in my paper, I link his indeterminacy arguments about human rights law to the practices of states. If Koskenniemi is right, it would be surprising if states obeyed international human rights law, wouldn’t it?

Is Ukraine doomed?

I mean doomed to lose its autonomy as a nation-state, whether or not its borders remain formally in place. Here are some reasons for thinking that it is:

1. Russia has placed 40,000 troops along its borders. The West has made clear that Ukraine is not worth a war. In the president’s words:

Of course, Ukraine is not a member of NATO, in part because of its close and complex history with Russia. Nor will Russia be dislodged from Crimea or deterred from further escalation by military force.

2. NATO is “suspending cooperation” with Russia, meaning:

Russia could not participate in joint exercises such as one planned for May on rescuing a stranded submarine, a NATO official said.

But, never mind–

Russia’s cooperation with NATO in Afghanistan – on training counter-narcotics personnel, maintenance of Afghan air force helicopters and a transit route out of the war-torn country – [will] continue.

3. Ukraine is deeply in debt to Russia among other countries, and is on the brink of economic ruin. Russia has just increased natural gas prices for Ukraine from $268.50 per 1,000 cubic meters to $385.50.

4. The $18 billion IMF package will help Ukraine pay its debts to Russia, and pay for gas from Russia, at the newly high prices. Think of the IMF package as a subsidy to Russia that counteracts the picayune sanctions.

5. Russia has announced an economic development plan for Crimea that ethnic Russians in eastern Ukraine will look at with envy.

6. Ukraine is deeply divided between East and West. Russia has argued that Ukraine should be given a “federalist” structure, and this proposal may be sensible. As Ilya Somin explains:

Federalism has often been a successful strategy for reducing ethnic conflict in divided societies. Cases like Switzerland, Belgium, and Canada are good examples. Given the deep division in Ukrainian society between ethnic Russians and russified Ukrainians on the one hand and more nationalistic Ukrainians on the other, a federal solution might help reduce conflict there as well by assuring each group that they will retain a measure of autonomy and political influence even if the other one has a majority in the central government. Although Ukraine has a degree of regional autonomy already, it could potentially would work better and promote ethnic reconciliation more effectively if it were more decentralized, as some Ukrainians have long advocated.

But it is predictable that a federal system in which Ukraine effectively consists of two regions–a Ukrainian region and a Russian region–will produce a weak country whose eastern half is dominated by Russia and whose western half will be isolated and alone.

7. Most important, Ukraine has never shown itself able to exist as a viable independent nation. Throughout nearly all of its history, it has been a province of Russia, or divided between Russia and other neighbors. The major period of independence from 1991 to the present–a blink of an eye–has been marked by extreme government mismanagement that has resulted in the impoverishment of Ukrainians relative to Poles, Russians, and other neighbors. In the 1990s, many experts doubted that Ukraine would survive. Now that Russia is back on its feet, their doubts seem increasingly realistic.

Russia has considerable leverage; it will use it.

The Human Rights Council and defamation of religion

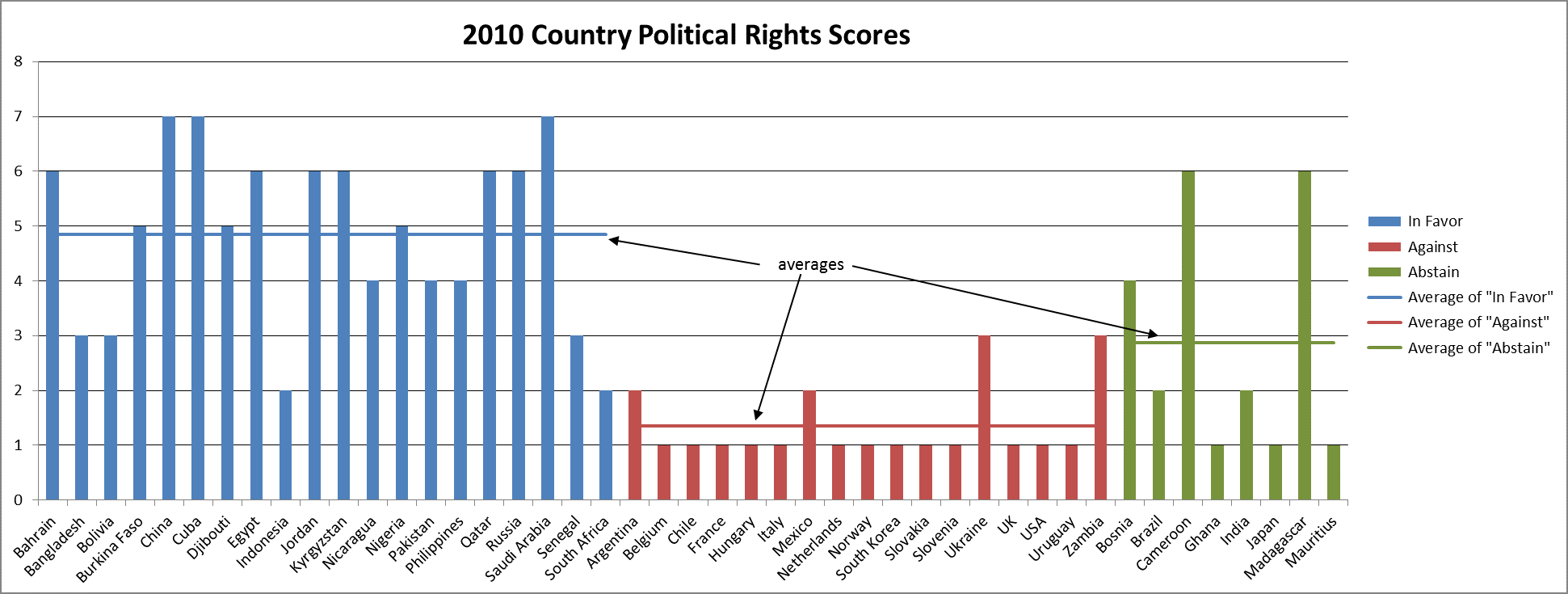

Yesterday I claimed that governments do not take the Human Rights Council seriously. The most famous example is the effort by that body to advance a right against “defamation of religion.” In 2010, a resolution supporting this right was passed by a vote of 20 to 17 with 8 abstentions. (There have been other votes in favor as well, both in the Human Rights Council and the General Assembly.) The graph showing the breakdown of votes by Freedom House score is above. The question for international lawyers is whether western governments like that of the United States are required to recognize a right against defamation of religion because a bunch of authoritarian countries think they should. If not, how exactly should we understand the legal status of the Human Rights Council?

The Human Rights Council and the role of authoritarian countries

Last week the UN Human Rights Council approved a resolution that requires states

to ensure transparency in their records on the use of remotely piloted aircraft or armed drones and to conduct prompt, independent and impartial investigations whenever there are indications of a violation to international law caused by their use.

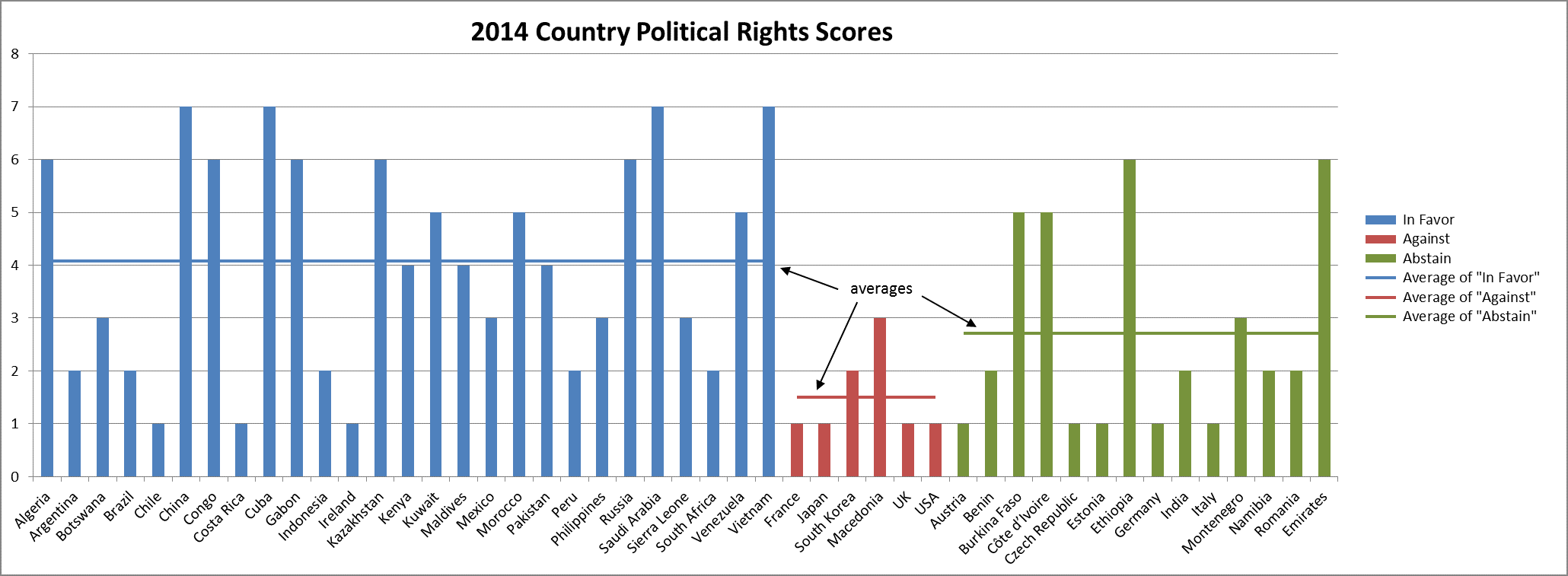

Ryan Goodman discusses the implications of this vote for international law. My view is that it has no implications. The views of the Human Rights Council are rarely taken seriously by governments. To see why, click on the graph pasted above. It reflects a pattern in votes of this type: that the apparently “progressive” resolution is in fact supported by (mainly) authoritarian countries like China and Saudi Arabia, while opposed by liberal democracies. Freedom House ranks countries based on their political rights from 1 (best) to 7 (worst). The average score of the resolution’s backers (the blue bars in the graph) is 4.1, while the resolution’s opponents (red) average 1.5, and the abstainers (green) average 2.7.

Anyone who thinks that resolutions like this one reflect a conscientious effort to interpret international human rights law doesn’t understand how the Human Rights Council operates. I will provide another example tomorrow.

Crimea, sanctions, and secession

In Slate I argue (once more) that the West can’t do much about Crimea. This time I focus on the ineffectiveness of economic sanctions against a large country, and I touch on the question of whether, and how much, the annexation harms western interests or principles. In short, the costs of sanctions are high and the benefits low. If Russia invades the rest of Ukraine, the calculus may change.

The European Court of Human Rights and Freedom House Ratings

This graph (you may need to squint) shows Freedom House political rights scores for countries that belong to the European Court of Human Rights. The blue bar shows their score at the time of accession (if a FH score is available; they go back only to the 1970s), and the orange bar shows the score in 2014. I was curious about how countries have fared in light of Russian and Turkish backsliding, and of a general sense that international human rights is stagnating, but most countries have improved or not changed. A score of 1 is best; 7 is worst. Note that countries are arranged in the order that they joined the Council of Europe; the original members joined in 1949; Portugal in 1976; and Montenegro in 2007.

Jeffrey Sachs on the “crisis of international law”

Sachs argues that there is a crisis in international law, based not only on Russia’s actions in Crimea but on the general disregard of use of force rules by the United States and other western countries:

As frightening as the Ukraine crisis is, the more general disregard of international law in recent years must not be overlooked. Without diminishing the seriousness of Russia’s recent actions, we should note that they come in the context of repeated violations of international law by the US, the EU, and NATO. Every such violation undermines the fragile edifice of international law, and risks throwing the world into a lawless war of all against all.

Sachs’ argument raises a number of questions:

(1) Is there a crisis in international law? And if so, did it start with Russia’s intervention in Crimea (as some people might argue), or earlier with U.S. and European actions going back 10-15 years?

(2) Sachs traces the crisis back to the 1999 Kosovo intervention. But the sorts of illegal uses of force he describes go back very far, for example, the 1989 intervention in Panama, or the 1983 intervention in Grenada, or the 1979 Soviet invasion of Afghanistan. There is a sense in which the use of force rules have been in crisis since their modern inception in the UN Charter in 1945. Why draw the line at 1999?

(3) Sachs implies that western illegality paved the path to Russia’s violation of international law. Is it true that if (for example) NATO had not illegally intervened in Kosovo or Iraq, then Russia would not have illegally intervened in Crimea? Is international law a “fragile edifice” that can be undermined by violations, or do the violations just tell us that existing rules are not well tailored to states’ interests? What of the argument that the Kosovo intervention, while illegal, stopped an even worse form of illegality, the ethnic cleansing of thousands of civilians?

(4) What does it mean for international law to be “in crisis”? That it is ignored? A better definition might be that states hold onto the law (they refuse to declare it defunct and try to rationalize their actions as legal) but frequently violate it. A crisis exists not just because the rules are violated but because states can’t agree on a set of rules to replace them, generating uncertainty and misaligned expectations that could lead to war.

Sachs concludes that the United States should turn to the Security Council to address the Crimea crisis. But note the paradox: Because Russia enjoys the veto, it can immunize itself from Security Council action, and thus continue to violate international law without facing a (formal) legal sanction. The route to peace might circumvent the Security Council. It may be that you can have law or peace, but not both.

Should Ukraine have kept its nuclear weapons?

After the collapse of the Soviet Union, Ukraine inherited a huge nuclear arsenal, which it subsequently gave up. In return it received assurances from Russia, the United States, and the United Kingdom that its territorial integrity would be respected. These assurances were embodied in the Budapest Memorandum of 1994. While the United States and the UK complied with that agreement by not invading Ukraine, Russia did not.

What if Ukraine had retained its nuclear arsenal? It seems more than likely that Russia would not have invaded Crimea. Putin might have calculated that Ukraine would not have used its nuclear weapons in defense because then Ukraine would itself have surely been obliterated by Russia. But the risk of nuclear war would have been too great; Putin would have stayed his hand. (However, it is possible that Ukraine would have been forced to give up its nuclear weapons one way or the other long before 2014.)

So between meaningless paper security assurances and nuclear weapons, the latter provides a bit more security. One implication of the Crimea crisis may be the further unraveling of the nuclear nonproliferation efforts that President Obama has made the centerpiece of his foreign policy.

My non-testimony at the PCLOB

Last week, I was supposed to testify before the Privacy and Civil Liberties Oversight Board, but wasn’t able to because of a flight delay. My written statement is here. My panel was asked to answer two questions.

1. Does International Law Prohibit the U.S. Government from Monitoring Foreign Citizens in Foreign Countries?

I said “no.” The U.S. government has long taken the position that the relevant treaty–the International Covenant on Civil and Political Rights, which includes a right to privacy–does not apply to conduct abroad, based on article 2(1), which says “Each State Party to the present Covenant undertakes to respect and to ensure to all individuals within its territory and subject to its jurisdiction the rights recognized in the present Covenant.” Some NGOs and other countries disagree, but even if they’re right, it’s hard to imagine that surveillance targets like foreign citizens or government officials fall within U.S. “jurisdiction.” And then the ICCPR doesn’t define “privacy,” and the treaty has never been understood to block monitoring of this type, as far as I know. That is why the Germans have recently proposed a new treaty, an optional protocol to the ICCPR, that would limit surveillance.

2. Should the United States Afford All Persons, Regardless of Nationality, a Common Baseline Level of Privacy Protection?

“No” again. Why should it? No other country that has the capacity to engage in surveillance respects the privacy of Americans. If nothing else, read this statement by Christopher Wolf, who describes the foreign surveillance laws and policies of other countries. Here is an excerpt about French law:

The 1991 [French] law is comparable to FISA in that it provides the government with broad authority to acquire data for national security reasons. Unlike FISA, however, the French law does not involve a court in the process; instead, it only involves an independent committee that only can recommend modifications to the Prime Minister. In addition, France’s 1991 law is broader than FISA in that it permits interceptions to protect France’s “economic and scientific potential,” a justification that is lacking in FISA.

The Kosovo precedent

There are actually two Kosovo precedents: (1) the 1999 war, and (2) the 2008 declaration of independence. Putin cites both–the first for the military intervention in Crimea, the second for the subsequent secession/annexation of Crimea.

Focusing on the first, much of the debate has turned on whether Crimea is like Kosovo, with western critics arguing that the Kosovo intervention was justified by humanitarian considerations not present in Crimea. The problem with this argument–if understood as a legal argument–is that no one believes that the Kosovo intervention was legal. The U.S. government has not made such a claim. Here is an account from a State Department lawyer named Michael Matheson:

NATO decided that its justification for military action would be based on the unique combination of a number of factors that presented itself in Kosovo, without enunciating a new doctrine or theory. These particular factors included: the failure of the FRY to comply with Security Council demands under Chapter VII; the danger of a humanitarian disaster in Kosovo; the inability of the Council to make a clear decision adequate to deal with that disaster: and the serious threat to peace and security in the region posed by Serb actions.

This was a pragmatic justification designed to provide a basis for moving forward without establishing new doctrines or precedents that might trouble individual NATO members or later haunt the Alliance if misused by others. As soon as NATO’s military objectives were attained, the Alliance quickly moved back under the authority of the Security Council. This process was not entirely satisfying to all legal scholars, but I believe it did bring the Alliance to a position that met our common policy objectives without courting unnecessary trouble for the future.

Matheson could have argued that the UN Charter’s flat ban on the use of military force should be interpreted away, perhaps in light of the Charter’s reference to human rights or some such thing. The UK would make such an argument. The U.S. never did. It still does not. The translation of Matheson’s exquisitely tortured statement is that we broke the law; we won’t do it again; and you better not, either.

Putin may be forgiven his skepticism about the “we won’t do it again” claim. The U.S. would do it again in 2003 (Iraq) and (arguably) 2011 (Libya). It did it before in 1989 (Panama) and 1983 (Grenada). Jack Goldsmith says, plausibly, “International law drops out because both actions were illegal, leaving only a fight over ‘legitimacy,’ which is even more in the eye of the beholder than legality.”

We can go farther. The U.S. did not advance a humanitarian intervention exception to the ban on the use of force because it did not believe that such an exception served its interests. It would open the door to other countries intervening whenever they believed humanitarian considerations justified intervention, leading to a surely impossible debate about what conditions constitute a humanitarian emergency and depriving the Security Council veto of its value. Russia and China also rejected the humanitarian intervention exception. So it could never become a part of international law.

Can the United States nonetheless argue that, while it broke the law in Kosovo and never paid a penalty, now, henceforth, it and everyone else must follow it? The problem with this argument is that the current use of force rules embodied in the UN Charter can be mutually acceptable to all countries only if all countries in fact follow them. If only the United States can break them by citing “pragmatic” considerations, the United States alone possesses a veto. Russia, China, and the rest need to decide whether Kosovo was a one-off event or not. They appear quite rationally to have concluded that it was not one-off, and that the United States doesn’t take seriously the UN rules. And so they will not, either.

“Russian lawmakers ask President Obama to impose sanctions on them all”

The reverse sanctions on Russia

Did I say that the sanctions imposed on Russian officials would end up rewarding them by enhancing their public standing? Well, here, from AFP, is the evidence:

US lawmakers ‘proud’ to be blacklisted in Russia row

Washington — US lawmakers scoffed at sanctions imposed on them by Russia Thursday, saying it was a point of pride to be on President Vladimir Putin’s blacklist.

…“Proud to be included on a list of those willing to stand against Putin’s aggression,” Boehner wrote on Twitter.

…“It doesn’t have to be this way, but if standing up for the Ukrainian people, their freedom, their hard earned democracy, and sovereignty means I’m sanctioned by Putin, so be it,” he [Robert Menendez] said.

And Senator John McCain, a fierce Kremlin critic who says Putin has long aimed to rebuild the Russian empire, also chimed in.

“I’m proud to be sanctioned by Putin — I’ll never cease my efforts & dedication to freedom & independence of #Ukraine, which includes #Crimea,” tweeted McCain, who visited Ukraine last weekend with other senators.

Now just imagine the corresponding article in a Russian newspaper.

Obama, the realist

Stephen Walt, the distinguished Harvard proponent of “realism” in international relations, argues that the Crimea debacle confirms the value of realism by showing how Obama’s liberal internationalist instincts led him astray:

To be sure, ousted president Viktor Yanukovych was corrupt and incompetent and the United States and the European Union didn’t create the protests that rose up against him. But instead of encouraging the protesters to stand down and wait for unhappy Ukrainians to vote Yanukovych out of office, the European Union and the United States decided to speed up the timetable and tacitly support the anti-Yanukovych forces. When the U.S. assistant secretary of state for European and Eurasian Affairs is on the streets of Kiev handing out pastries to anti-government protesters, it’s a sign that Washington is not exactly neutral. Unfortunately, enthusiastic supporters of “Western” values never stopped to ask themselves what they would do if Russia objected.

Walt makes a number of astute points–the chief one being that Russia has strong security interests in Ukraine while the United States does not–but his conclusion is exactly backwards. The West in fact did virtually nothing to encourage democratic forces in Ukraine. The United States offered virtually no aid–$1 billion in loan guarantees, which is pocket change. And the reason was that the United States did not care what happened in Ukraine, for all the reasons Walt gives. The West could not have “encourag[ed] the protesters to stand down”–that would have been politically impossible–and even if it had, and they had, Putin would still have seized Crimea. To believe otherwise, you would have to take seriously Putin’s claim that he objected to the illegality of the removal of Yanukovych, when in fact what he really cared about was losing Ukraine to the West. If handing out pastries to protesters was our way of showing support for democracy, then I rest my case.

And while it is hard to call the annexation of Crimea a foreign policy “success,” the do-nothing response of the United States is exactly the correct response from Walt’s realist perspective. If we have little interest in Ukraine, we have literally zero interest in Crimea, a poor, out-of-the-way place. In fact, as Walt hints, it is most likely that Russia has violated realist tenets, not the United States, with Putin reacting to domestic political pressures or perhaps acting recklessly by risking war for a peninsula that Russia already effectively controlled. And so our major goal should be to ensure that we respond rationally rather than emotionally to the annexation by not letting it interfere with areas of potential cooperation with Russia. By imposing meaningless sanctions on Russia, that is what Obama, a Waltian realist, is doing.

Vladimir Putin, international lawyer

From his speech to the Duma (with my annotations in brackets):

However, what do we hear from our colleagues in Western Europe and North America? They say we are violating norms of international law. Firstly, it’s a good thing that they at least remember that there exists such a thing as international law – better late than never. [So there!]

Secondly, and most importantly – what exactly are we violating? True, the President of the Russian Federation received permission from the Upper House of Parliament to use the Armed Forces in Ukraine. However, strictly speaking, nobody has acted on this permission yet. Russia’s Armed Forces never entered Crimea; they were there already in line with an international agreement. True, we did enhance our forces there [without entering?]; however – this is something I would like everyone to hear and know – we did not exceed the personnel limit of our Armed Forces in Crimea, which is set at 25,000, because there was no need to do so. [But Russian forces appear to have roamed about Crimea in violation of this agreement as well as the UN Charter.]

Next. As it declared independence and decided to hold a referendum, the Supreme Council of Crimea referred to the United Nations Charter, which speaks of the right of nations to self-determination [true]. Incidentally, I would like to remind you that when Ukraine seceded from the USSR it did exactly the same thing, almost word for word. Ukraine used this right, yet the residents of Crimea are denied it. Why is that? [Why indeed?]

Moreover, the Crimean authorities referred to the well-known Kosovo precedent – a precedent our western colleagues created with their own hands in a very similar situation, when they agreed that the unilateral separation of Kosovo from Serbia, exactly what Crimea is doing now, was legitimate and did not require any permission from the country’s central authorities. Pursuant to Article 2, Chapter 1 of the United Nations Charter, the UN International Court agreed with this approach and made the following comment in its ruling of July 22, 2010, and I quote: “No general prohibition may be inferred from the practice of the Security Council with regard to declarations of independence,” and “General international law contains no prohibition on declarations of independence.” Crystal clear, as they say. [I’m afraid so.]

I do not like to resort to quotes, but in this case, I cannot help it. Here is a quote from another official document: the Written Statement of the United States America of April 17, 2009, submitted to the same UN International Court in connection with the hearings on Kosovo. Again, I quote: “Declarations of independence may, and often do, violate domestic legislation. However, this does not make them violations of international law.” [Right.] End of quote. They wrote this, disseminated it all over the world, had everyone agree and now they are outraged. Over what? The actions of Crimean people completely fit in with these instructions, as it were. For some reason, things that Kosovo Albanians (and we have full respect for them) were permitted to do, Russians, Ukrainians and Crimean Tatars in Crimea are not allowed. Again, one wonders why.

We keep hearing from the United States and Western Europe that Kosovo is some special case. What makes it so special in the eyes of our colleagues? It turns out that it is the fact that the conflict in Kosovo resulted in so many human casualties. Is this a legal argument? The ruling of the International Court says nothing about this. [True; it is legally irrelevant.] This is not even double standards; this is amazing, primitive, blunt cynicism. One should not try so crudely to make everything suit their interests, calling the same thing white today and black tomorrow. According to this logic, we have to make sure every conflict leads to human losses. [The U.S. position is that forcing Kosovo’s population to remain a part of a country whose government tried to massacre it would be wrong, and numerous efforts were made to broker a compromise before secession took place. Putin argues that it would be ridiculous to make Crimea wait for its population to be massacred before seceding.]

I will state clearly – if the Crimean local self-defence units had not taken the situation under control, there could have been casualties as well. [This is doubtful, as there were no massacres anywhere else in Russian-speaking Ukraine that did not benefit from “local self-defense units”.] Fortunately this did not happen. There was not a single armed confrontation in Crimea and no casualties. Why do you think this was so? The answer is simple: because it is very difficult, practically impossible to fight against the will of the people. Here I would like to thank the Ukrainian military – and this is 22,000 fully armed servicemen. I would like to thank those Ukrainian service members who refrained from bloodshed and did not smear their uniforms in blood.

Other thoughts come to mind in this connection. They keep talking of some Russian intervention in Crimea, some sort of aggression. This is strange to hear. I cannot recall a single case in history of an intervention without a single shot being fired and with no human casualties. [But because the military force was overwhelming.]

Colleagues,

Like a mirror, the situation in Ukraine reflects what is going on and what has been happening in the world over the past several decades. After the dissolution of bipolarity on the planet, we no longer have stability. Key international institutions are not getting any stronger; on the contrary, in many cases, they are sadly degrading. [True] Our western partners, led by the United States of America, prefer not to be guided by international law in their practical policies, but by the rule of the gun. [Hmm] They have come to believe in their exclusivity and exceptionalism [ahem], that they can decide the destinies of the world, that only they can ever be right. They act as they please: here and there, they use force against sovereign states, building coalitions based on the principle “If you are not with us, you are against us.” To make this aggression look legitimate, they force the necessary resolutions from international organisations, and if for some reason this does not work, they simply ignore the UN Security Council and the UN overall. [Hmm]

In other words, we did not act illegally but if we did, you did first. The subtext, I think, is that the United States claims for itself as a great power a license to disregard international law that binds everyone else, and Russia will do the same in its sphere of influence where the United States cannot compete with it.

The toothless sanctions on Russia

The U.S. and EU imposed asset freezes and travel bans on a handful of Russian and Ukrainian officials connected to the Crimean secession. Most of them are mid-level people; a few are Putin aides. They did not impose asset freezes and travel bans on Putin or other big shots, nor did they impose sanctions on Russia itself.

Why not? You can easily imagine that if Putin had been sanctioned, he would not be able to back down or make concessions–because then it would look as if he betrayed Russia for personal financial reasons. But the same logic applies to the mid-level people, who now must redouble the aggressiveness of their stance on Ukraine. And because the sanctions were imposed on a handful of people rather than all of Russia, the Russian government can easily compensate the sanctioned individuals for any losses that they have sustained. Indeed, probably their public standing has increased as the sanctions will make them look important to the Russian public who are probably learning the identities of some of them for the first time.

So the sanctions aren’t even toothless: they reward rather than punish the wrongdoers. They are best seen as a signal, the weakest possible signal, one that indicates that we will accept Crimea if you go no farther. I suspect that it would have been more sensible to send this signal by imposing a weak sanction on all of Russia than to single out these people who will be perceived as heroes who have made sacrifices for the motherland.

Easterly’s The Tyranny of Experts

William Easterly argues that efforts to help poor countries achieve economic growth have gone astray because western experts impose top-down recipes for growth (a kind of Stalinist approach that mixes hubris and incompetence):

The conventional approach to economic development … is based on a technocratic illusion: that the belief that poverty is a purely technical problem amenable to such technical solutions as fertilizers, antibiotics, or nutritional supplements.

The problem, as Easterly shows in this book and in his previous books (including the terrific White Man’s Burden), is that these technical solutions fail because western experts rarely understand the intricacies of the local environment, and, more important, the politics and institutions in the countries they seek to help. Often, technically flawless development projects fail because of corruption and abuse in the country that is being helped. The gleaming power plant operates for a few years and then falls into disrepair because the absence of an effective legal system that enforces property and contract rights makes it impossible to collect bills or protect against squatters.

The solution? It turns out to be human rights:

What you can do [about global poverty] is advocate that the poor should have the same rights as the rich…. This assertion of the rights of the poor is needed now more than ever…. This book argues [that] an incremental positive change in freedom will yield a positive change in well-being for the world’s poor.

Easterly does not explain what any of this means. Which rights should we advocate? How should we insist that they be implemented? What should we do to governments that refuse to take our advice? I suspect that if he gave these questions some thought, he would realize that any serious effort to compel or bribe poor countries to recognize rights would look like the development activities that he criticizes. Indeed, his bête noire, the World Bank, famously tried to implement “rule of law” projects that were supposed to enhance rights. These projects failed for all the reasons that all the other development projects failed.

Easterly provides no evidence that if we advanced human rights in poor countries, well-being in those countries, or even respect for rights, would improve. In fact, there isn’t any.

Fragile by Design, by Calomiris & Haber

Fragile by Design, by Charles Calomiris and Stephen Haber, is a great book. The authors argue that the stability and efficiency of financial systems in different countries depend on political bargains that set the rules of the game. Authoritarian countries produce inefficient state-owned banking systems because governments cannot commit not to expropriate. But democracies produce a wide range of outcomes, depending on the configuration of constituencies and interest groups. The United States is cursed with a highly unstable banking system because local interests have been able to ensure a huge number of local unit banks that were insufficiently diversified. This system finally broke down thanks to the inflation of the 1970s, but our further misfortune was a political bargain between activist groups and large banks in the 1990s that resulted in a system where banks were encouraged to reduce underwriting standards so as to extend credit to low-income people. Meanwhile, countries like Canada were lucky enough that the initial political bargain at a national level led to a small number of stable and efficient banks that weathered the financial crisis of 2008.

There are a huge number of moving parts and epicycles (why was our unstable system so stable from 1936 to 1980, and what explains the success of activists like ACORN?), but the book is nonetheless enormously illuminating, and contains the most powerful and concise account of the causes of the 2008 crisis that I have seen.

Goodbye, Crimea

It doesn’t matter that the referendum did not allow voters to express a preference for the status quo, that many of the 90+ percent who favor annexation by Russia (according to (possibly questionable) exit polls) may have been trucked in, that international election monitors were not used, that ballot boxes may have been stuffed, that Tatar groups refused to participate, that the public debate was drowned out by pro-Russian propaganda, and that Russian soldiers and/or pro-Russia militias roamed the streets. It is sufficient that there wasn’t violence, that western journalists were free to move about and interviewed plenty of ordinary people who strongly favored annexation, that there were enthusiastic public demonstrations in favor of annexation and celebrations after the result was announced, and that the outcome is consistent with demographic realities and what seems plausibly (to us ill-informed westerners) the preference of most Crimeans. Unless large groups of Tatars and ethnic Ukrainians take to the streets to protest the referendum and are clubbed by riot police, any western effort at this point to try to rescue Crimea from the invaders it embraces will be not only pointless but ludicrous. The West is now in the impossible position of being pro-democracy and arguing that Crimea should be returned to Ukraine against the will of the people. Even if the referendum was all theater, it was effective theater.

The image above, from Wikipedia, shows the demographic composition of Ukraine as of 2001. Blue means an area where the majority speaks Ukrainian; red means an area where the majority speaks Russian. Doesn’t this suggest a foregone conclusion once Putin made his move?

The Crimean secession vote

In an earlier piece, I said

If a fair vote is held, and Crimeans vote overwhelmingly to join Russia, then any Western effort to stop them will be seen as an attempt to thwart the will of the people, a violation of their right to self-determination, which is enshrined in the U.N. charter and multiple human rights treaties.

I didn’t actually think it likely that a fair vote would be held; I was instead trying to avoid discussing the more complicated case where a fair vote is not held. (Some might call this qualification lawyerly; others, weaselly.) In any event, it is becoming increasingly clear that a fair vote will not be held, as discussed in this NYT article and this National Interest piece by former ambassador to Ukraine, John Herbst. I also received this illuminating email from a Ukrainian-American student in the United States (who continues to visit and do work in Ukraine):

If you follow the Russian and Ukrainian language press as well as Crimean groups on social-networking sites (such as SOS_Krym), you already realize that large scale attempts at voter fraud are under way. Several of my friends in Crimea (this has been verified by reports throughout the peninsula) have been visited by unidentified individuals who either make off with their passports or damage them. This just so happens to coincide with an announcement by Sevastopol city authorities that any form of photo ID will be accepted during the referendum, given what has been happening to passports. This is a clear invitation to “Russian tourists”, many of whom have already created problems in Donetsk and Kharkov.

Interestingly, this is voter fraud on TOP of voter fraud since the ballot itself, absurdly, presents residents of Crimea with two options – join [Russia] or seek independence – without any space for a “no” vote on either of those options. All under the watchful eye of Russian special forces and “local self-defense militias.” Does that sound like a legitimate referendum to you? How can this referendum be legitimate if it doesn’t accurately reflect the will of the people, and how can it accurately reflect the will of the people when it is being carried out under these types of circumstances?

MOREOVER, the government which is calling for this referendum was installed by those very same Russian spetsnaz and approved by Yanukovych (at that point no longer in charge of anyone or anything). Aksyonov, the current head of the Crimean administration, leads a party called “Russian Unity” and received 4% of the vote in the last elections. He is a fringe figure and I can assure you (as someone who worked in the region) that before all of this started, Aksyonov and his ilk were regarded as nothing more than a joke by domestic and international observers alike. Is a referendum planned by an illegitimate government with no support…legitimate?

Finally, I would urge you to rethink the implication of the following: “Crimea’s ties with Russia go back centuries. It was transferred from Russia to Ukraine only in 1954 while both countries were regions of the Soviet Union. This transfer reflected a top-down administrative judgment, not the sentiments of the Ukrainian or Crimean peoples.” Your implication leads to a slippery slope because, as you well know, Crimea belonged to the Crimean Khanate (present day Crimean Tatars) long before the Russian Empire controlled the region. If we’re going all the way back to the 1950s, why use 1783 as our historical reference point? If historical precedent is what we’re really looking at, perhaps we should transfer the land to the descendants of the Scythians? Or the descendants of the Romans – the Italian state? Crimea has passed hands so many times that attempting to find a legitimate government somewhere in the folds of time is a futile endeavor, at best.

… One more thing – I’m not sure if the Western press has published anything about this, but the leader of the Crimean Tatar Mejlis (the Mejlis is described by Crimean Tatars as a “body of local self-government” in Crimea – the Mejlis has offices in nearly every corner of the Crimea and lays claim to representing the interests of Crimean Tatars) called on Crimean Tatars to boycott the referendum. Refat Chubarov (leader of the Mejlis) voiced many of the same concerns I did about Russian tourists coming to Crimea and voting in the referendum. He also deemed this referendum illegitimate due to ballot design and the fact that 400,000 more ballots have been printed than there are residents in Crimea. This is all in addition to the fact that the government of Crimea is not legitimate and that the referendum is taking place under the watchful eye of Russian special forces, but that goes without saying. So, in effect, anywhere from 12-15% of Crimea’s population will most likely be boycotting this referendum.

So the political and international-law implications of an unfair referendum cannot be avoided. I hope to address them after the referendum has been held, and the extent of the unfairness can be gauged.

Goldman on Bitcoin

Business Insider exaggerated when it announced that Goldman completely obliterates Bitcoin in a new report. The report includes interviews with Bitcoin supporters. And while the Goldman analysts are skeptical that Bitcoin could serve as a currency (the view of nearly everyone nowadays), they do not rule out a role in the payments system. Currently, merchants pay 2-3 percent of purchase price to accept electronic payments. Bitcoin service providers charge 1 percent. But as the Goldman analyst notes, much of the cost of the current payment system is attributable to security and legal requirements that Bitcoin providers will eventually need to confront. Merchants who use bitcoin pay an additional 1 percent to exchanges in order to avoid exchange rate risk. Traditional payments systems will also reduce costs in response to competition from Bitcoin. However this all works out, the long-term effect of Bitcoin will not be anarchist utopia but slightly lower prices: you may end up paying $100 rather than $101 for an item you buy over the web.
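
For readers who want to check the arithmetic, here is a rough sketch of the fee comparison using only the percentages quoted above. The function name and the $100 purchase price are my own illustrative choices, and the sketch assumes (perhaps generously) that any saving is passed on in full to the buyer.

```python
# Rough fee comparison on a $100 purchase, using the rates quoted in the post.
# Amounts are in cents and rates in basis points so the arithmetic stays exact.

def fee_cents(price_cents: int, rate_bp: int) -> int:
    """Fee on a sale, given a rate in basis points (100 bp = 1 percent)."""
    return price_cents * rate_bp // 10_000

PRICE_CENTS = 10_000                 # hypothetical $100 item
CARD_RATES_BP = (200, 300)           # 2-3 percent for electronic payments
BITCOIN_RATE_BP = 100 + 100          # 1 percent provider fee + 1 percent exchange fee

bitcoin_fee = fee_cents(PRICE_CENTS, BITCOIN_RATE_BP)

# Saving at the low and high ends of the card-fee range.
savings_cents = tuple(
    fee_cents(PRICE_CENTS, rate) - bitcoin_fee for rate in CARD_RATES_BP
)

for rate, saving in zip(CARD_RATES_BP, savings_cents):
    print(f"card fee {rate / 100:.0f}%: saving of ${saving / 100:.2f} per $100")
```

On these numbers the saving runs from nothing to about a dollar per $100, which is where the "$100 rather than $101" figure comes from, and that is before Bitcoin providers take on the security and legal costs mentioned above.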

Other thoughts:

Bitcoin miners with 51 percent of the computing power on the Bitcoin network control the supply: they can decide to increase it. Question: don’t they have strong incentives to undersupply bitcoins (that is, to vote against increasing the supply to the social optimum while hoarding bitcoins) in order to maximize their profits, like De Beers?

Bitcoin’s legal problems are just beginning. An interview with a pair of lawyers reveals a potentially huge regulatory web that legitimate bitcoin institutions will need to navigate. Once bitcoin futures come into existence in sufficient volume, the CFTC will step in. We already know about money laundering laws, which require bitcoin services to keep tabs on customers and report suspicious transactions. The SEC has gotten into the act because of efforts to combine Bitcoin and securities. State regulatory agencies may require Bitcoin-related companies to obtain licenses akin to those that money transmitters like banks must obtain, which are costly. It also seems likely that Bitcoin services will, like existing money transmitters, be required to keep funds on hand to compensate customers if their bitcoins are lost—a further cost. Not discussed, but also worth considering, is the possibility that people will try to manipulate the bitcoin market—as I suggested above—necessitating another layer of regulatory scrutiny.