I comment at The New Republic on allegations that President Obama is acting like a monarch. Incidentally, I thought the debate about whether George W. Bush was Hitler or merely Caesar or perhaps Napoleon was also phony, so maybe I lack credibility.

All posts by Eric Posner

Thomas McGarity’s Freedom to Harm

Via a helpful review by Daniel Farber, I found out about this book, which is a much-needed one. I have searched in vain for some time for an overall assessment of deregulation in the United States. Unfortunately, if the remit of McGarity’s beloved Consumer Product Safety Commission extended to books, this one would have to be recalled.

McGarity argues that the deregulation movement arose from a conspiracy between business interests and right-wing intellectuals, who hoodwinked Congress and the public. In fact, deregulation was largely a bipartisan movement that started in the Carter administration, and reflected an emerging consensus that many (but not all) regulations did more harm than good–in particular, rate regulation. McGarity barely discusses, or does not discuss at all, the airline, trucking, and railroad deregulation of the 1970s, which has generally received high marks, or the resistance of business interests to some forms of deregulation–all of this contrary to his thesis. He is certainly right that a lot of deregulation went too far–notably financial deregulation–but because he refuses to provide a realistic baseline for determining whether deregulation benefits or harms the public, he provides no reasonable method for distinguishing between good deregulation and bad deregulation or, for that matter, good regulation and bad regulation.

Instead, he resorts to anecdotes. One of the weakest chapters discusses transportation safety, and he includes some distressing anecdotes of terrible accidents that he blames on deregulation. But transportation safety has greatly improved over the period of deregulation. Numerous studies show that railroads, airlines, passenger vehicles, and other modes of transportation are vastly safer today than they were in the 1970s. McGarity acknowledges some of these statistics at the beginning of the chapter, but by the end he has forgotten them, and instead pronounces deregulation a disaster for safety. Nor does he acknowledge the economic benefits from transportation deregulation, which have been extensively documented by economists.

Similar points can be made about other chapters, for example, the chapter on workplace safety, which provides a tendentious picture of mine safety being utterly neglected, when in fact safety has steadily improved (as shown by the graph above). The fatality rate dropped from 0.200 (1970) to 0.059 (in 1980) to 0.016 (in 2010) fatalities per 100,000 workers in coal mines. Certainly, stricter regulation would have caused the fatality rate to drop even further, but would it have been worth the cost? No answer is provided.

Another lurking question is the extent to which deregulation actually took place. As Farber notes, the evidence is often equivocal. The sheer number of rules has greatly increased; budgets are a more complex story, but private rights of action have also become more important. When rate regulation in the railroad and telecommunications sectors was eliminated, it was also thought necessary to introduce regulations to ensure free entry, leading to quite complex regulatory regimes. Airline safety was never deregulated; the fear was that price competition would lead to less safe airlines. What exactly deregulation is, and whether it has had good or bad effects, are important questions. We’ll need to wait for another book for the answers.

Response to Will’s response to my class 4 comments

Will’s post is here.

1. Will has on several occasions argued that when a critic points out a particular defect X or Y in originalism, the critic must also show that some other interpretive methodology does not suffer from that defect, or is not otherwise inferior to originalism. It takes a theory to beat (or outrun) a theory.

This is not exactly right, though it contains an element of truth. Some theories are so bad that one can condemn them without comparing them to others. The theory that justices should consult the Zodiac in order to resolve disputes is one. At some point, we will need to examine alternative theories and see how they measure up to originalism. But in the meantime, it is pragmatically implausible to insist that one must constantly juggle all the theories at once (how many?) in order to be justified in pointing out a problem with one of them.

2. Will says “I see our government strictly following the founding-era document a huge amount of the time.” The modern system of governance in this country is vastly different from what existed in the eighteenth and nineteenth centuries. If it is consistent with the text, that can only be because the text is so vague and full of holes, undefined terms, and so on. Any style of originalism that can accommodate the current system of government has hardly any constraining force at all.

3. Will says “I think many (though not all) invocations of originalism are sincere.” Frank Cross’ book is the most rigorous effort to test this hypothesis and he finds no evidence that originalism constrains justices. It may be, as Cross suggests, that this reflects motivated reasoning rather than insincerity, but the effect is the same. This is also a problem for Will’s claim in a more recent post that originalism can constrain judges.

Ancient Roman religious practices: a correction

I received an email from a former student with the promising subject line “entrails.” It reads:

You mentioned in your post on Originalism class 4 that Roman priests would examine bird entrails in preparation for great political events. Actually, a Roman haruspex would examine the entrails (usually the liver) of any particular sacrificial animal, which could have been poultry if the offeror was poor, but was most commonly sheep. An augur’s method of divination, by contrast, involved watching the skies and interpreting the birds’ flight paths– most famously, when Romulus and Remus determined that Rome was to be founded on the Palatine hill rather than the Aventine when Romulus saw twelve auspicious birds in the sky, while Remus saw only six.

In one of his lesser known letters, Cicero told of an augur who complained that haruspices lacked empathy. A haruspex who overheard the comment angrily dismissed augury as “legalistic argle-bargle.”

How much has executive power increased?

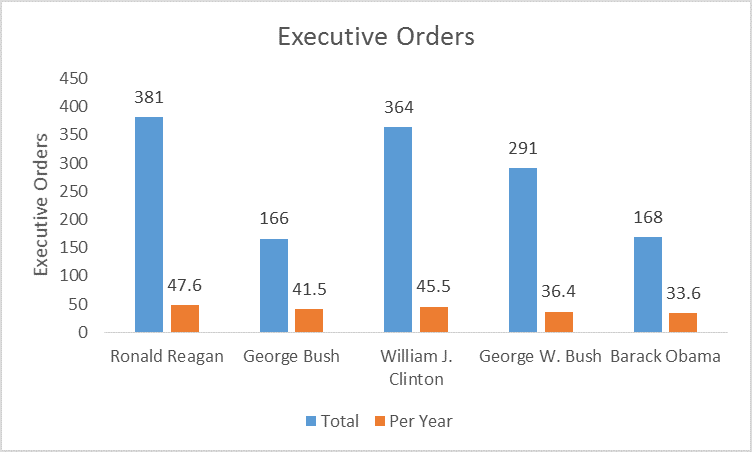

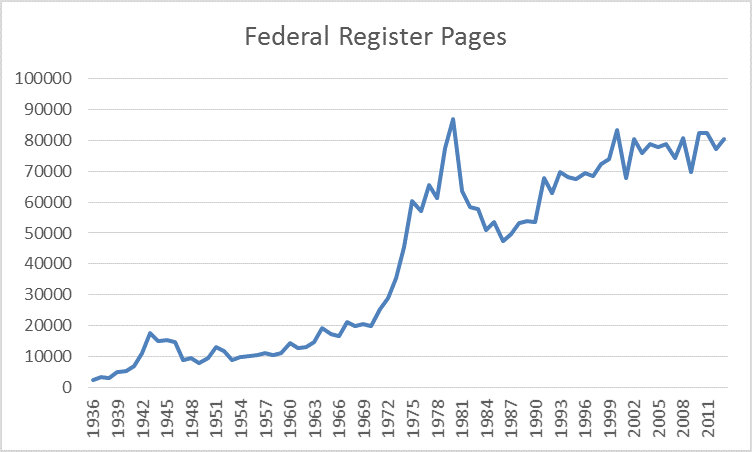

As Andrew Rudalevige notes, in response to fellow Monkey Cage inmate, Erik Voeten, presidents do not exercise power only through executive orders. Moreover, many executive orders are trivial while others are important, so one can learn only so much from their absolute numbers. For that reason, it is important to look at other measures of executive power. The graph above shows the number of pages in the Federal Register each year to provide a rough sense of regulatory activity of the executive branch. For many reasons, this measure is extremely crude, but it reinforces two important points: that executive power has increased dramatically since World War II, and that in recent years any particular president such as Obama or Bush does not act much differently from his predecessors.

Originalism class 4: Brown

Will says that he’s not sure whether Brown is right or wrong as a matter of original meaning, and even if it is wrong, this kind of problem–a popular case being inconsistent with an interpretive theory–is not unique to originalism. Moreover, it is a mistake to judge an interpretive theory by its moral goodness, he says.

The last point is in tension with the first, and the first point is in tension with one of originalism’s supposed advantages–that it produces determinate results. But time and again, the original meaning turns out to be obscure, and so either courts must be willing to continually reevaluate precedents as new historical research is produced (which is unacceptable from the standpoint of judicial economy and legal stability) or the original meaning loses its ability to exert influence on legal outcomes as precedent accumulates. Will says in response to Klarman’s criticisms of McConnell that it’s really hard to determine what the original meaning of the 14th Amendment is, and new evidence and analysis constantly appear more than a century later, so maybe eventually we’ll agree that Brown is consistent with the original understanding after all. But this is a defect of originalism, not a virtue.

An interpretive method that can’t account for Brown, or treats it as an epicycle, is useless. It provides no guidance to people as they decide what laws to pass and how to plan their lives, or any guidance to judges who seek conscientiously to extend the constitutional tradition.

And this is why originalism must be based on moral considerations, as all constitutional theories must be–at least, if the goal is to persuade justices to overturn precedents and citizens and politicians to support those goals. A successful constitutional theory must appeal to institutional values that people (or enough people) share; otherwise, it is a purely theoretical construct with no practical relevance. (Originalism has done as well as it has because of the support it receives from conservatives and libertarians, who find the quasi-libertarian political culture of the founding era appealing.)

I’m not sure how otherwise one derives a justification for an interpretive methodology. From some readings and some of Will’s comments, I see two possibilities. First, originalism is right just because Americans are originalists. I don’t think that’s true. Americans support Brown and will continue to do so regardless of what historians eventually show.

Second, originalism is right because we are bound by a written constitution; it’s simply the consequence of a larger commitment to constitutionalism. But the constitution in practice is just what the various branches of government agree are the rules of the game at any given time. In their hands, the founding-era document is little more than a rhetorical flourish, used strategically. That is our political culture, one that happens to require ritual obeisance to the founders. Thus would the Roman priests examine the entrails of birds in preparation for a great political event. How long would one of those priests have lasted if he really thought he could discover in those entrails the will of the gods?

A strange debate about executive power

As I note in a comment on NYT’s Room for Debate, the “executive order” imbroglio coming out of the State of the Union speech is strange. The White House told newspapers before the speech that the president planned to sling about executive orders like Zeus with his thunderbolts, and they duly reported it on their front pages. Republicans duly exploded with outrage. The speech itself has a single mention of executive orders (“I will issue an executive order requiring federal contractors to pay their federally-funded employees a fair wage of at least $10.10 an hour”). The president continues in this vein, saying that he is going to do a bunch of other extremely minor things using his existing statutory authority, though it would be better if Congress would chip in with some legislation. The resulting controversy about presidential power is entirely manufactured–by both sides. Maybe the president’s strategy was to look fierce to his supporters while not actually doing anything that might get him in trouble with Congress.

Obama unbound?

Charles Barzun replies on inside/outside

Here is Charles Barzun, who gets the final word. The original paper is here; Charles’ response; my reply; Adrian’s reply.

In my response to their thought-provoking paper, I argued that the supposed fallacy that Eric and Adrian identify depends on empirical claims about judicial behavior in a way that they denied. My point was that although the targets of their critique may make different assumptions about what motivates judges and what motivates political actors in the other branches, those assumptions are not necessarily “inconsistent” if the different treatment is justified by the different institutional norms and constraints that operate on judges, as compared to other political actors (which I consider to be at least in part an empirical question). Neither this point – nor any other one I made – depends on controversial claims about the nature of truth or logical consistency, postmodern or otherwise.

In their brief rejoinders, Eric and Adrian continue to insist that their argument does not depend on any empirical claims about what motivates judges. But in so arguing, each of them contradicts himself and concedes my original point in the process.

Adrian first says of the kind of argument they were examining that “it is caught in a dilemma — it can survive filter (1) only by taking a form that causes it to be weeded out by filter (2).” I take Adrian to mean here that the argument can avoid the charge of inconsistency (filter (1)) but only at the cost of making implausible empirical assumptions about how judges act (filter (2)). But then he goes on to say that by the time we are considering the empirical question (filter (2)), “the fallacy has already dropped out by that point; it is not affected at all by whatever happens in the debate at the second stage.” But how can it be that the fallacy is “not affected” by what happens at the second stage if, as he has just said, it can “survive filter (1)” by making empirical claims that filter (2) then “weeds out”?

Eric makes the same error in even more efficient fashion. He says, “we sometimes argue that they escape the problem only by making implausible arguments. But the inside-outside problem does not depend on our skepticism about these specific arguments being correct.” Eric’s second sentence contradicts his first. He acknowledges that the targets of their critique can “escape the problem” (of inconsistency) by making what he considers to be implausible empirical arguments. But then he insists that their charge of inconsistency does not depend on those empirical arguments about judicial behavior being implausible. But how can that be the case if, as he has just said, the scholars can avoid inconsistency if those empirical arguments are correct?

I don’t think I’m the postmodernist in this debate.

Adrian Vermeule responds to Charles Barzun on the inside/outside fallacy

I generally follow Johnson’s advice never to respond to critics, but this is the season for breaking resolutions. So let me offer a brief rejoinder to Charles Barzun’s response to the Posner/Vermeule paper on the Inside/Outside Fallacy; both are recently published by the University of Chicago Law Review.

Eric and I suppose that successful arguments (in constitutional theory, inter alia) must pass through two separate, independent and cumulative filters: (1) a requirement of logical consistency (the inside/outside fallacy is one way of violating this requirement); (2) a requirement of substantive plausibility (not ultimate correctness).

With respect to some of the particular arguments we discuss in the paper, we say that the argument is caught in a dilemma — it can survive filter (1) only by taking a form that causes it to be weeded out by filter (2). Now in some of those cases, I take it, Charles disagrees with us that the argument fails the second filter. He is of course entitled to his views about that. But the inside/outside fallacy — which is the first filter — is strictly about the logical consistency of assumptions, not their plausibility. Thus the fallacy has already dropped out by that point; it is not affected at all by whatever happens in the debate at the second stage. It’s just a muddle to say that because Eric and I do happen to have substantive views about what counts as plausible for purposes of the second filter, we are therefore smuggling substantive content into the first filter. Not so — unless one subscribes to the postmodern view that logical consistency is itself a substantive requirement, thereby jettisoning the distinction between validity and truth. (In some passages, Charles seems willing to abandon himself utterly to that hideous error, but for charity’s sake we ought not read him so, if we can help it).

So when Charles says that the inside/outside fallacy smuggles in substantive assumptions, I think that’s a confusion that arises from failing to understand the distinction between the two filters. The reader of Charles’s piece should be alert for skipping to and fro between these distinct questions of logical consistency and plausibility.

Adrian Vermeule

(And see Eric’s earlier reply.)

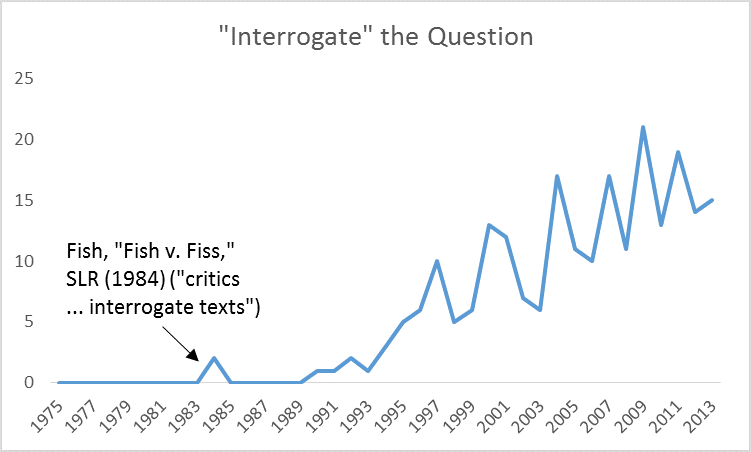

The “interrogate” bubble in legal scholarship

The grudging case for making a deal with Snowden

I examine the grudging case at Slate.

Voting rules in international organizations

International organizations use a bewildering variety of voting rules. Courts, commissions, councils, and the General Assembly use majority rule. The WTO, the International Seabed Authority, the IMF, the World Bank, and the Security Council use various types of supermajority rule, sometimes with weighted voting, sometimes with voters divided into chambers that vote separately, sometimes with vetoes. The voting rules in the EU defy any simple description. Al Sykes and I try to bring some order to this mess in our new paper.

Melissa Schwartzberg’s Counting the Many

This book provides a nice history of the evolution of voting rules, with emphasis on supermajority rules, but is less successful in its attempt to argue that supermajority rule should presumptively be replaced with majority rule. Schwartzberg simultaneously argues that majority rule is superior to supermajority rule because the latter creates a bias in favor of the status quo, and acknowledges that a status quo bias is justified so that people can plan their lives. Her solution–what she calls “complex majoritarianism”–is the manipulation of majority rules so that they are applied to favor–the status quo. For example, she favors constitutional amendment requiring a temporally separated majority vote in the legislature (plus subsequent ratification), but the effect is just bias in favor of the status quo except in the unlikely event that preferences don’t change. She argues that this approach advances deliberation but deliberation can be encouraged in other ways and in any event the status quo bias is not resolved.

The book is right to emphasize historical, empirical, and institutional factors as opposed to the sometimes tiresome analytics of social choice theory–as emphasized by this enthusiastic review here–but Schwartzberg’s argument against supermajority is ultimately analytic itself, based on abstract considerations of human dignity, rather than grounded in history or empiricism. The empirical fact that the book doesn’t come to terms with is that supermajority rule is well-nigh universal, not only in constitutions but virtually every organization–clubs, corporations, civic associations, nonprofits–where people voluntarily come together and use supermajority rules to enhance stability and to prevent situational majorities from expropriating from minorities.

Response to Simon Caney on feasibility and climate change justice

Simon Caney argues, in a welcome departure from the usual claims in this area of philosophy, that negotiating a climate treaty is not just a matter of distributing burdens fairly, but also requires a climate treaty that countries are actually willing to enter–“feasible,” to use the word that David Weisbach and I use in our book Climate Change Justice.

But he rejects our argument that the only feasible treaty is one that makes every state better off by its own lights relative to the world in which no treaty exists, and that if advocates, ethicists, and (more to the point) government officials insist that a treaty be fair (in the sense of forcing historical wrongdoers to pay, redistributing to the poor, or dividing burdens equally), there will never be such a treaty.

He says that if a government refuses to enter a fair but burdensome treaty because it knows that voters will punish it for complying, then that just means that voters have a duty not to punish the government, and instead to compel the government to act according to the philosopher’s sense of morality. But because voters don’t recognize such a duty, we are back where we started. His underlying assumption seems to be that voters will cause governments to act morally; ours is that voters will (at best) acquiesce in a treaty that avoids harms that are greater than the costs of compliance. So while, unlike many philosophers, he recognizes a feasibility constraint, he waters it down beyond recognition.

The EU’s recent backpedaling on climate rules shows once again that feasibility, not ethics, should be a necessary condition for proposals for distributing the burdens of a climate treaty.

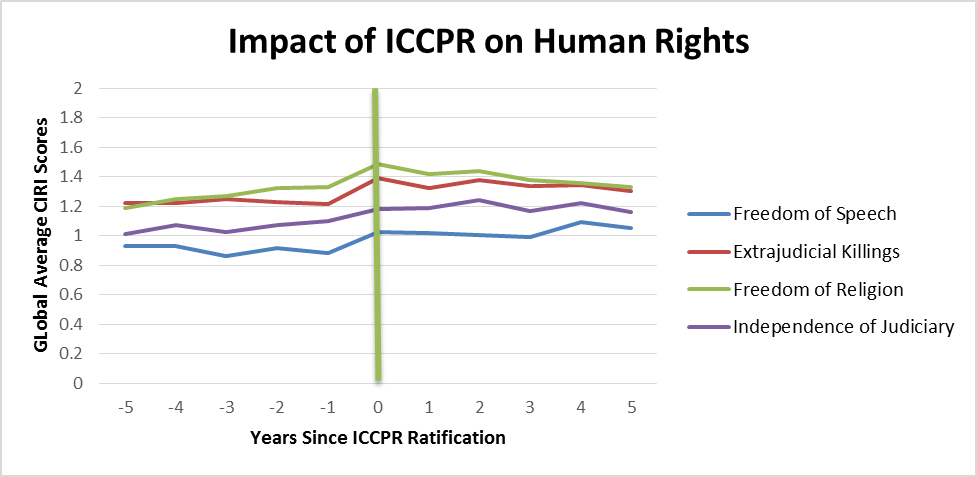

Impact of International Covenant on Civil and Political Rights

Source: Cingranelli-Richards Human Rights Dataset (0 (bad) to 2 (good)).

Reply to Barzun on Inside/Outside

Charles Barzun argues that Adrian Vermeule and I smuggled substantive assumptions into what we characterized as a methodological criticism about legal scholarship and judging. I don’t think he’s right. Our major example is the judge who says that he may settle a dispute between the executive and legislature based on the Madisonian theory that “ambition must be made to counteract ambition.” In appealing to Madison, the judge implicitly puts into question his own impartiality, as Madison was referring to the judiciary as well as the other branches. We didn’t argue that judges should never resolve disputes between the executive and the legislative, just that the judge (or, more plausibly, an academic) must supply a theory that does not set the judge outside the system.

Barzun thinks that we make “controversial claims about the nature of law and how judges decide cases,” in particular, that we make excessively skeptical assumptions about judicial motivation. I don’t think we do, but the major point is that our argument in this paper does not depend on such claims.

For example, suppose the judge responds to our argument by saying that he is in fact public-spirited, and only presidents and members of Congress are ambitious. That may well be so, but then he must abandon Madison’s argument and make his own as to why these are plausible assumptions about political behavior. If one shares the judge’s optimism about human nature, one might believe that the president and members of Congress are also public-spirited, in which case judicial intervention in an inter-branch clash may not be warranted. The judge can also, of course, make arguments about different institutional constraints, public attitudes, and so on, which may justify judicial intervention. But that is a different theory, different from the Madisonian theory that he and many scholars propound.

In the course of describing the various ways that scholars respond to the inside-outside problem, we sometimes argue that they escape the problem only by making implausible arguments. But the inside-outside problem does not depend on our skepticism about these specific arguments being correct, and our real point is that most of the time scholars and (especially) judges do not try to make such arguments but instead ignore the contradictions in which they entangle themselves.

David Bosco’s Rough Justice

David Bosco’s new book tells the history of the International Criminal Court. It is nicely done and will be a reference for everyone who does work in this area. The conclusion will not surprise any observers: the ICC survived efforts at marginalization by great powers but only by confining its investigations to weak countries. Thus, the ICC operates de facto according to the initial U.S. proposal, rejected by other countries, to make ICC jurisdiction conditional on Security Council (and hence U.S.) approval.

Bosco seems to think this equilibrium can persist, but the book only touches on (perhaps because it is too recently written) the growing resentment of weak countries, above all African countries, which have woken up to the fact that the Court is used only against them, and have begun to murmur about withdrawing. The Court now faces political pressure to avoid trying not only westerners, but also Africans. Meanwhile, the Kenya trials are heading toward debacle, while the ICC is unable to address international criminals like Assad. The Court’s room to maneuver is shrinking rapidly, and one wonders whether it can sustain its snail’s pace (one conviction over a decade) much longer. The book might have been called “Just Roughness.”

Originalism class 3: precedent

What should originalists do about precedent? If they respect it, then the original meaning will be lost as a result of erroneous or non-originalist decisions that must be obeyed. If they disregard it, then Supreme Court doctrine is always up for grabs, subject to the latest historical scholarship or good-faith judicial disagreement (as illustrated by the competing Heller opinions). One can imagine intermediate approaches: for example, defer only to good originalist precedents, or defer only when a precedent has become really really entrenched. But while such approaches may delay the eventual disappearance of original meaning behind the encrustation of subsequent opinions, they cannot stop it, sooner or later. Our readings–Lawson, McGinnis & Rappaport, Nelson–provide no way out that I can see. (Lawson dismisses the problem, while the others propose intermediate approaches.) Originalism has an expiration date.

Another issue is raised by McDonald–the gun control case. In Heller, Scalia disregards precedent in order to implement what he thinks was the original understanding of the Second Amendment. In McDonald, he writes a concurrence that cheerfully combines Heller with the anti-originalist incorporation decisions. Why doesn’t he feel constrained to revisit those decisions? Instead, he joins a holding that generates constitutional doctrine that in practical terms is more remote from the original understanding (gun rights that constrain the states) than the doctrine that would have resulted had he gone the other way in Heller (no gun rights at all), given the greater importance for policing of the state governments both at the founding and today. This is akin to the second-best problem in economics: partial originalism–originalism-and-precedent–may lead to outcomes that are less respectful of original understandings than non-originalist methodologies would.

*** Will responds; his VC colleague David Bernstein’s post about the clause-by-clause problem is also worth reading.

A simple (and serious) puzzle for originalists

All originalists acknowledge the “dead hand” problem, and so all agree that the normative case for originalism depends on the amendment procedure being adequate for keeping the constitution up to date. Or at least all of the originalists I have talked to (n=1). Yet it can be shown that the Article V amendment procedure is unlikely to be adequate, and the probability that it is adequate across time is virtually nil.

The reason is that outcomes produced by voting rules depend on the number of voters (and also the diversity of their interests but I will ignore that complication since it only reinforces the argument). An easy way of seeing this is to consider the strongest voting rule—unanimity—and imagine that people flip a coin when they vote (the coin flip reflects the diversity of their interests, not a failure to vote their interests), and can agree to change a law only when all voters produce heads. The probability of achieving unanimity with a population of 2 is 1/4 (only one chance of two heads out of four possible combinations), with a population of 3 is 1/8, and so on.
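The coin-flip arithmetic can be checked with a short Python sketch. The function name and the extra population sizes are illustrative assumptions, not part of the original argument; the only thing taken from the text is that each voter independently comes up heads with probability 1/2.

```python
from fractions import Fraction


def unanimity_probability(n_voters: int, p_heads: Fraction = Fraction(1, 2)) -> Fraction:
    """Probability that all n independent voters come up heads.

    Assumption: votes are independent fair coin flips, as in the text.
    """
    return p_heads ** n_voters


# Populations of 2 and 3 come from the text; 10 and 100 are illustrative.
for n in (2, 3, 10, 100):
    print(n, unanimity_probability(n))
```

The probability halves with each additional voter, so under unanimity even a modest population makes change all but impossible.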

For a more rigorous formulation, consider a spatial model from 0 to 1, with a 2/3 supermajority rule. The status quo is chosen randomly (on average 1/2), and the population chooses whether to change it. If the population is 3, voters will change the outcome with probability of (near) 1, because 2 of the 3 people will draw an outcome greater than or less than 1/2 with probability of (near) 1. If the population is 6, there is now a non-trivial probability that 3 of the 6 people will be on one side of 1/2, and 3 people on the other side, so a 2/3 majority (4 people) will be unable to change the status quo.
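A rough sketch of this calculation in Python follows. It makes two simplifying assumptions that are not in the text: the status quo is pinned at exactly 1/2 rather than drawn at random, and each voter independently falls above or below 1/2 with equal probability. The function name is hypothetical. Under those assumptions the number of voters on one side is binomial, and the status quo changes whenever either side reaches the supermajority threshold.

```python
from fractions import Fraction
from math import ceil, comb


def change_probability(n_voters: int, threshold: Fraction = Fraction(2, 3)) -> Fraction:
    """Probability that some side of 1/2 musters the required supermajority.

    Assumptions (simplifications of the model in the text): the status quo
    sits at exactly 1/2, and each voter is independently above or below it
    with probability 1/2.
    """
    needed = ceil(threshold * n_voters)  # e.g. 2 of 3 voters, 4 of 6 voters
    favorable = sum(
        comb(n_voters, k)                # ways to have k voters above 1/2
        for k in range(n_voters + 1)
        if k >= needed or n_voters - k >= needed
    )
    return Fraction(favorable, 2 ** n_voters)


print(change_probability(3))  # 1
print(change_probability(6))  # 11/16
```

With 3 voters, two of them always share a side, so change is certain. With 6 voters, a 3-3 split occurs with probability 20/64, which leaves an 11/16 chance of change.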

The U.S. population has increased from 4 million at the time of the founding to 300 million today. If the amendment rules were optimal in 1789, they are not optimal today. If they are optimal today, then they won’t be optimal in a few years. Originalism with a fixed amendment process can be valid only with a static population.

This argument comes from Richard Holden, Supermajority Voting Rules; and Rosalind Dixon and Richard Holden, Constitutional Amendments: The Denominator Problem (who supply empirical evidence).

There is a related argument that one can make based on the Buchanan/Tullock analysis of optimal voting rules. Thanks to Richard for a helpful email exchange.